Kafka producers publish messages to Kafka topics, and Kafka consumers read those messages by subscribing to the topics. To learn more, please refer to Apache Kafka Architecture. In this tutorial, we will learn how to build a producer-consumer model using the Kafka console tools.

Steps to implement producer-consumer using Kafka console

To implement a producer-consumer model using the Kafka console, we need to perform the following steps:

- Create a new topic with kafka-topics.

- Produce messages with kafka-console-producer.

- Consume messages with kafka-console-consumer.

Step 1- Start ZooKeeper and the Kafka server

First, we need to start ZooKeeper and the Kafka server. To learn how to run ZooKeeper and Kafka, please refer to Apache Kafka – How to run Kafka?

Step 2- Create a Kafka Topic

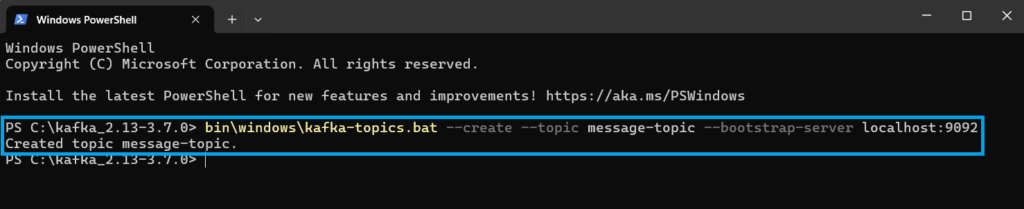

Kafka producers are applications that publish messages (events) to Kafka topics. Before we can write any messages, we need to create the topic. To create a Kafka topic, open a terminal in the Kafka root directory and run the following command:

`bin\windows\kafka-topics.bat --create --topic message-topic --bootstrap-server localhost:9092`

As soon as we run this command, Kafka creates the topic and prints a confirmation message.

Let’s understand the command in detail:

- First, we need to be in the Kafka root directory (here, kafka_2.13-3.7.0).

- We run kafka-topics.bat, which is located in the bin\windows folder.

- `--create --topic message-topic`: creates a new topic named message-topic.

- `--bootstrap-server localhost:9092`: tells the tool to connect to the Kafka broker running at localhost:9092.
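If you want to script this step, the same command can be assembled and run from Python. This is a minimal sketch, not part of Kafka: the helper names are hypothetical, the default follows this tutorial's Windows layout, and the Linux/macOS branch assumes the standard bin/*.sh scripts that ship alongside the Windows .bat files.

```python
import os
import subprocess
from pathlib import PurePosixPath, PureWindowsPath


def kafka_script(tool: str, windows: bool = True) -> str:
    """Path of a Kafka CLI script relative to the Kafka root directory.

    Windows uses bin\\windows\\<tool>.bat; Linux/macOS use bin/<tool>.sh.
    """
    if windows:
        return str(PureWindowsPath("bin", "windows", f"{tool}.bat"))
    return str(PurePosixPath("bin", f"{tool}.sh"))


def create_topic_command(topic: str,
                         bootstrap_server: str = "localhost:9092",
                         windows: bool = True) -> list[str]:
    """Argument list equivalent to the kafka-topics command in this step."""
    return [
        kafka_script("kafka-topics", windows),
        "--create",
        "--topic", topic,                        # name of the topic to create
        "--bootstrap-server", bootstrap_server,  # broker the tool connects to
    ]


def create_topic(topic: str, kafka_root: str, **options) -> None:
    """Run the command; requires ZooKeeper and the Kafka broker to be running."""
    cmd = create_topic_command(topic, **options)
    cmd[0] = os.path.join(kafka_root, cmd[0])  # use the script's full path
    subprocess.run(cmd, check=True)  # raises CalledProcessError on failure
```

For example, `create_topic("message-topic", r"C:\kafka_2.13-3.7.0")` would create the topic, where the path is a placeholder for wherever Kafka is extracted. Building an argument list instead of a single string avoids shell quoting problems.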

Step 3- Produce messages using kafka-console-producer

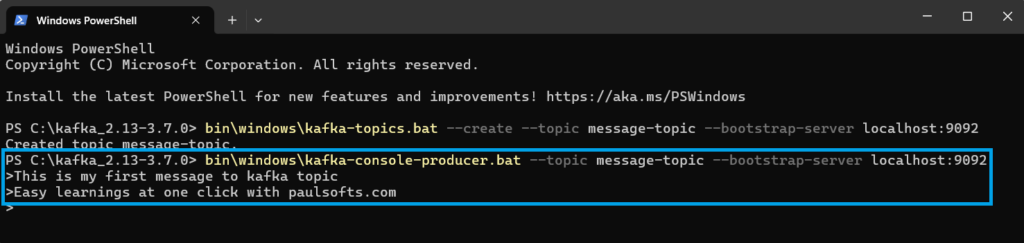

Now that we have created our topic, we can write sample messages to message-topic. To write messages to the Kafka topic, we use the following command:

`bin\windows\kafka-console-producer.bat --topic message-topic --bootstrap-server localhost:9092`

After running this command, we can type any number of messages; each line we enter is sent to the topic as a separate message.

Here we run kafka-console-producer.bat, which is located in the bin\windows folder of the Kafka root directory. The `--topic` option specifies the topic we want to publish to, and `--bootstrap-server` tells kafka-console-producer to connect to the Kafka broker running at localhost:9092.
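Because the console producer reads standard input line by line and sends each line as one message, messages can also be piped in instead of typed. A minimal Python sketch, assuming it is run from the Kafka root directory on Windows with the broker at localhost:9092; `producer_input` and `produce` are hypothetical helper names:

```python
import subprocess


def producer_input(messages: list[str]) -> str:
    """Join messages into the stdin text that kafka-console-producer reads.

    The console producer sends each input line as one message, so a message
    containing a line break would be split into several messages.
    """
    for message in messages:
        if "\n" in message or "\r" in message:
            raise ValueError(f"message contains a line break: {message!r}")
    return "".join(message + "\n" for message in messages)


def produce(messages: list[str], topic: str = "message-topic",
            bootstrap_server: str = "localhost:9092") -> None:
    """Pipe messages into the console producer (run from the Kafka root)."""
    cmd = [r"bin\windows\kafka-console-producer.bat",
           "--topic", topic, "--bootstrap-server", bootstrap_server]
    # The producer exits once stdin reaches end-of-file.
    subprocess.run(cmd, input=producer_input(messages), text=True, check=True)
```

Calling `produce(["first event", "second event"])` would publish two messages, the same as typing those two lines at the interactive prompt.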

Step 4- Create Kafka Consumer

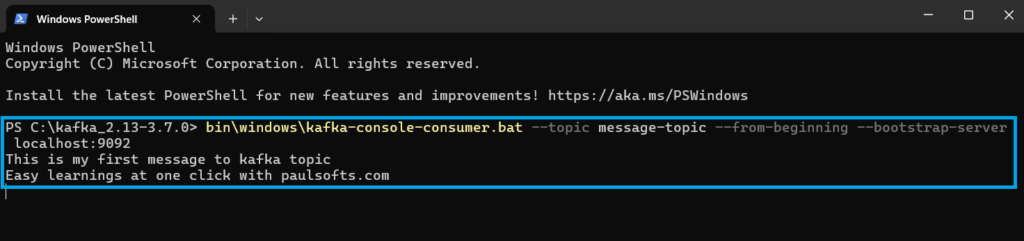

We have added messages (events) to the Kafka topic using kafka-console-producer. Now we will read those events from the topic using kafka-console-consumer with the following command:

`bin\windows\kafka-console-consumer.bat --topic message-topic --from-beginning --bootstrap-server localhost:9092`

Below, we can see that all the messages we published with the console producer can now be read by the console consumer.

Let’s understand the above command in detail:

- To consume events from Kafka, we run kafka-console-consumer.bat, which is located in the bin\windows folder of the Kafka root directory.

- `--topic message-topic`: specifies the topic from which we want to read events.

- `--from-beginning`: tells the consumer to start from the earliest message in the topic. Without this option, a newly started console consumer only shows messages produced after it starts.

- `--bootstrap-server localhost:9092`: connects the consumer to the Kafka broker running at localhost:9092.
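The effect of `--from-beginning` can be pictured with a toy model: a topic partition is an append-only log, and each consumer keeps its own position (offset) in it. For a consumer with no committed offset, `--from-beginning` starts at the earliest offset, while the default starts at the end of the log so only later messages are seen. The classes below are a hypothetical plain-Python illustration, not the Kafka client API:

```python
from dataclasses import dataclass, field


@dataclass
class ToyTopic:
    """A single-partition topic modeled as an append-only list of messages."""
    log: list[str] = field(default_factory=list)

    def append(self, message: str) -> None:
        self.log.append(message)


@dataclass
class ToyConsumer:
    """A reader that tracks its own offset in the topic's log."""
    topic: ToyTopic
    offset: int

    @classmethod
    def start(cls, topic: ToyTopic, from_beginning: bool) -> "ToyConsumer":
        # Earliest offset (0 in this toy log) with --from-beginning,
        # otherwise the current end of the log.
        return cls(topic, 0 if from_beginning else len(topic.log))

    def poll(self) -> list[str]:
        """Return every message after the current offset, then advance it."""
        new_messages = self.topic.log[self.offset:]
        self.offset = len(self.topic.log)
        return new_messages
```

If "hello" and "world" are already in the topic, a consumer started with `from_beginning=True` reads both, while one started without it reads nothing until a new message is appended; after that, both read only the new message.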

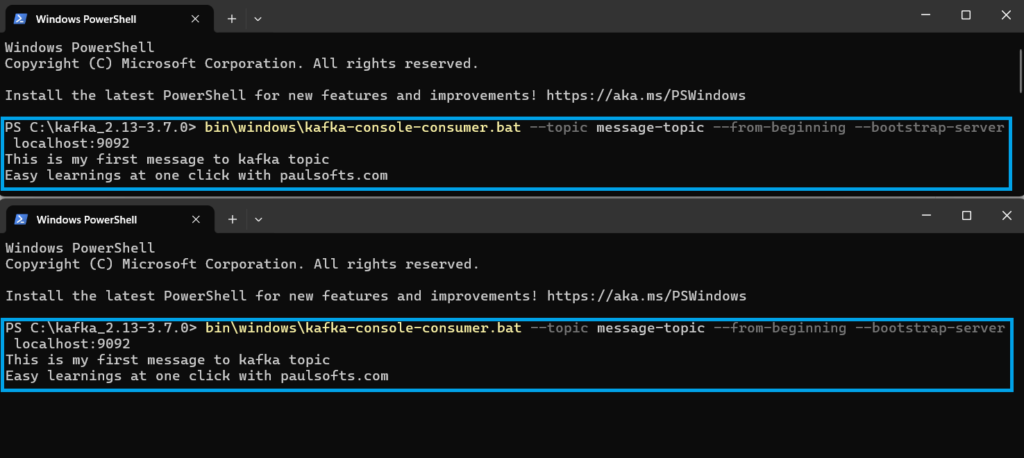

Step 5- Create Multiple Kafka Consumers

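We can create multiple consumers that read data from the same Kafka topic at the same time. Below, we can see two different consumers reading from the same topic, i.e., message-topic. Because each console consumer started without the `--group` option joins its own automatically generated consumer group, each of them receives every message published to the topic.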
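Consumer groups explain this behavior: committed offsets are tracked per group, so consumers in different groups each read the whole topic, whereas consumers that share a group divide the topic's partitions between them. The toy model below is a hypothetical plain-Python illustration (not Kafka's API) of a single-partition topic; for simplicity, every new group starts at the beginning of the log, as with `--from-beginning`, and the group names are made up:

```python
from collections import defaultdict


class ToyBroker:
    """Single-partition topic with committed offsets stored per consumer group."""

    def __init__(self) -> None:
        self.log: list[str] = []
        self.committed: dict[str, int] = defaultdict(int)  # group -> next offset
        self.owner: dict[str, str] = {}  # group -> consumer assigned the partition

    def produce(self, message: str) -> None:
        self.log.append(message)

    def poll(self, group: str, consumer: str) -> list[str]:
        # With one partition, only one consumer per group can be assigned it.
        owner = self.owner.setdefault(group, consumer)
        if owner != consumer:
            return []
        start = self.committed[group]
        self.committed[group] = len(self.log)  # commit the new position
        return self.log[start:]
```

In this model, two consumers in separate groups (like two console consumers started without `--group`) both receive every message, while a second consumer joining an existing group on a single-partition topic receives nothing because the partition is already assigned.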